The bug I talked about a little while ago has now also had the fix I wrote committed to the mysql-trunk 5.5.6-m3 repository.

Author Archives: Stewart Smith

HailDB 2.0.0 released!

(Reposted from the HailDB Blog. See also the announcement on the Drizzle Blog.)

We’ve made our first HailDB release! We’ve decided to make this a very conservative release: fixing some minor bugs, getting a lot of compiler warnings fixed and starting to make the name change in the source from Embedded InnoDB to HailDB.

Migrating your software to use HailDB is really simple. In fact, for this release, it shouldn’t take more than 5 minutes.

Highlights of this release:

- A lot of compiler warnings have been fixed.

- The build system is now pandora-build.

- Some small bugs have been fixed.

- Header file is now haildb.h instead of innodb.h

- We display “HailDB” instead of “Embedded InnoDB”

- Library name is libhaildb instead of libinnodb

- It is probably binary compatible with the last Embedded InnoDB release, but we don’t have explicit tests for that, so YMMV.

Check out the Launchpad page on 2.0.0 and you can download the tarball either from there or right here:

- haildb-2.0.0.tar.gz

MD5:  183b81bfe2303aed435cdc8babf11d2b

SHA1: 065e6a2f2cb2949efd7b8f3ed664bc1ac655cd75

540W of LED rope for monorail track

Testing how much power the rope will pull off the generators

HOWTO screw up launching a free software project

Josh Berkus gave a great talk at linux.conf.au 2010 (the CFP for linux.conf.au 2011 is open until August 7th) entitled “How to destroy your community” (lwn coverage). It was a simple, patented, 10 step program, finely honed over time to have maximum effect. Each step is simple and we can all name a dozen companies that have done at least three of them.

Simon Phipps this past week at OSCON talked about Open Source Continuity in practice – specifically mentioning some open source software projects that were at Sun but have since been abandoned by Oracle and different strategies you can put in place to ensure your software survives, and check lists for software you use to see if it will survive.

So what can you do to not destroy your community, but ensure you never get one to begin with?

Similar to destroying your community, you can just make it hard: “#1 is to make the project depend as much as possible on difficult tools.”

#1 A Contributor License Agreement and Copyright Assignment.

If you happen to be in the unfortunate situation of being employed, this means you get to talk to lawyers. While your employer may well have an excellent Open Source Contribution Policy that lets you hack on GPL software on nights and weekends without a problem – if you’re handing over all the rights to another company – there gets to be lawyer time.

Your 1hr of contribution has now just ballooned. You’re going to use up resources of your employer (hey, lawyers are not cheap), it’s going to suck up your work time talking to them, and if you can get away from this in under several hours over a few weeks, you’re doing amazingly well – especially if you work for a large company.

If you are the kind of person with strong moral convictions, this is a non-starter. It is completely valid to not want to waste your employers’ time and money for a weekend project.

People scratching their own itch, however small, is how free software gets to be so awesome.

I think we got this almost right with OpenStack. If you compare the agreement to the Apache License, there’s so much common wording it ends up pretty much saying that you agree you are able to submit things to the project under the Apache license. This (of course) makes the entire thing pretty redundant, as if people are going to be dishonest about submitting things under the Apache license there’s no reason they’re not going to be dishonest and sign this too.

You could also never make it about people – just make it about your company.

#2 Make it all about the company, and never about the project

People are not going to show up, do free work for you to make your company big, huge and yourself rich.

People are self serving. They see software they want only a few patches away, they see software that serves their company only a few patches away. They see software that is an excellent starting point for something totally different.

I’m not sure why this is down at number three… it’s possibly the biggest one for danger signs that you’re going to destroy something that doesn’t even yet exist…

#3 Open Core

This pretty much automatically means that you’re not going to accept certain patches for reasons of increasing your own company’s short term profit. i.e. software is no longer judged on technical merits, but rather political ones.

There is enough politics in free software as it is, creating more is not a feature.

So when people ask me about how I think the OpenStack launch went, I really want people to know how amazing it can be to just not fuck it up to begin with. Initial damage is very, very hard to ever undo. The number of Open Source software projects originally coming out of a company that are long running, have a wide variety of contributors and survive the original company is much smaller than you think.

PostgreSQL has survived many companies coming and going around it, and is stronger than ever. MySQL only has a developer community around it almost in spite of the companies that have shepherded the project. With Drizzle I think we’ve been doing okay – I think we need to work on some things, but they’re more generic to teams of people working on software in general rather than anything to do with a company.

A tale of a bug…

So I sometimes get asked if we funnel bug reports or patches back to MySQL from Drizzle. Also, MariaDB adds some interest here as they are a lot closer to (and indeed compatible with) MySQL. With Drizzle, we have deviated really quite heavily from the MySQL codebase. There are still some common areas, but they’re getting rarer (especially to just directly apply a patch).

Back in June 2009, while working on Drizzle at Sun, I found a bug that I knew would affect both. The patch would even directly apply (well… close, but I made one anyway).

So the typical process of me filing a MySQL bug these days is:

- Stewart files bug

- In the next window of Sveta being awake, it’s verified.

This happened within a really short time.

Unfortunately, what happens next isn’t nearly as awesome.

Namely, nothing. For a year.

So a year later, I filed it in launchpad for MariaDB.

So, MariaDB is gearing up for a release and it’s a relatively low priority bug (but it does have a working, correct and obvious patch). Within 2 months, Monty applied it and improved the error checking around it.

So MariaDB bug 588599 is Fix Committed (June 2nd 2010 – July 20th 2010), MySQL Bug 45377 is still Verified (July 20th 2009 – ….).

(and yes, this tends to be a general pattern I find)

But Mark says he gets things through… so yay for him.

At OSCON

I’m at OSCON this week. Come say hi and talk Drizzle, Rackspace, cloud, photography, vegan food or brewing.

linux.conf.au 2011 CFP Open!

Head on over to http://lca2011.linux.org.au/ and check it out!

You’ve got until August 7th to put in a paper, miniconf, poster or tutorial.

Things I’d like to see come from my kinda world:

- topics on running large numbers of machines

- latest in large scale web infrastructure

- latest going on in the IO space: (SSD, filesystems, SSD as L2 cache)

- Applications of above technologies and what it means for application performance

- Scalable and massive tcp daemons (i.e. Eric should come talk on scalestack)

- exploration of pain points in current technologies and discussion on ways to fix them (from people really in the know)

- A Hydra tutorial: starting with stock Ubuntu lucid, and exiting the tutorial with some analysis running on my project.

- Something that completely takes me off guard and is awesome.

I’d love to see people from the MySQL, Drizzle and Rackspace worlds have a decent presence. For those who’ve never heard of/been to an LCA before: we reject at least another whole conference worth of papers. It’s the conference on the calendar that everything else moves around.

Dynamic Range Theory

A great video podcast is Meet the GIMP. It’s quite accessible and has some useful information. The recent(ish) episode on Dynamic Range Theory is useful if you’re wondering why images look different through your eyes, on an LCD and on paper (and what the hell the difference between RAW and JPEG is).

Kodak Ektar 100 – fun with colour negative film

I’ve been writing a bit about my adventures with Black & White film and developing myself. I haven’t (yet) developed my own colour negative (C41 process) film. I do hope to do so at some point in the future – even though I can get the local lab to do it for $4 a roll, it’s nice to be able to do this yourself.

When I was young, I also took photos. I still use that camera sometimes too. Recently I’ve been scanning in the first ever slide film I shot – a roll of Kodachrome when I was 8 years old. I do like the look of Kodachrome, and am sad that it’s going away.

Last year, when I was in the US for Burning Man, I got introduced to Kodak Ektar 100. With the promise of colours that remind you of Kodachrome, I grabbed a bunch and headed to San Francisco and then Burning Man.

I liked the look of a bunch of stuff I shot. For example:

Recently, on my trip to Hong Kong, I shot some too. The above was all shot with an old Ricoh SLR, when I was in Hong Kong I used my Nikon F80 and the 50mm f1.8 lens.

One of my favourites was of this little statue:

In Hong Kong a lot of buildings are interconnected so you can walk between them without having to go outside (where it’s hot and humid). There are bits of sculpture in the buildings around the Rackspace office. This is one near the hotel I was staying at. During the morning and afternoons, these walkways are filled with people, exactly like streets…. but a floor above and indoors.

I’m adding more shots from Hong Kong to my Flickr Photostream as the days go on.

I really like this film. I even don’t mind it for people… the first was the test shot (have I loaded correctly, things winding, wonder if this shot will work) in the hotel lobby in San Francisco. Leah:

I should learn to scan better (I have since, this was probably the first image I scanned using my scanner, certainly the first Ektar frame). Another two people images I like on Ektar are:

Dare I say that I always seem to find the Ektar colours to be relaxed? I like it. The blues really shine through. Reds are also really nice (heck, I even love the yellow), and I plan to go and investigate how I can combine these colours in interesting ways on film.

PBMS in Drizzle

Some of you may have noticed that blob streaming has been merged into the main Drizzle tree recently. There are a few hooks inside the Drizzle kernel that PBMS uses, and everything else is just in the plug in.

For those not familiar with PBMS it does two things: provide a place (not in the table) for BLOBs to be stored (locally on disk or even out to S3) and provide a HTTP interface to get and store BLOBs.

This means you can do really neat things such as have your BLOBs replicated, consistent and all those nice databasey things as well as easily access them in a scalable way (everybody knows how to cache HTTP).

This is a great addition to the AlsoSQL arsenal of Drizzle. I’m looking forward to it advancing and being adopted (now much easier now that it’s in the main repository).

Reciprocity failure

“As the light level decreases out of the reciprocity range, the increase in duration, and hence of total exposure, required to produce an equivalent response becomes higher than the formula states” (see Wikipedia entry).

Those of us coming from having shot a lot of digital, especially when your experience of low light photography is entirely with digital, are going to get a bit of a shock at some point. Why didn’t this image work exactly as I wanted it to? Why isn’t there as much.. well.. image!

You’ll probably read things like “you don’t need to worry about it until you’re into really long exposures” or maybe you’ll start reading the manufacturers documents on the properties of the film and just go “whatever”.

Ilford Delta 3200 Professional is one of the films where you have to start caring about it pretty quickly. Basically, you need to overexpose once you start getting exposures greater than ~1second.

In decent light, handheld with a pretty quick exposure, things look great:

But whack things on a tripod and have a bit of a longer exposure and you’re going to start failing a bit. Even though I like this shot, I find that it’s just not quite got everything I would have liked to capture. Just exposing a bit more I think would have done it. I had to do too much in scanning and the GIMP…

So I learnt something with this roll, which is always good.

No, I haven’t forgotten digital (darktable for the epic win)

This was my first real play with darktable. It’s a fairly new “virtual lighttable and darkroom for photographers” but if you are into photography and into freedom, you need to RUN (not walk) to the install page now.

My first real use of it was for a simple image that I took from my hotel room when I was in Hong Kong last week. I whacked the fisheye on the D200, walked up to the window (and then into it, because that’s what you do when looking through a fisheye) and snapped the street scene below as the sun was going away.

I’d welcome feedback… but I kinda like the results, especially for a shot that wasn’t thought about much at all (it was intended as a just recording my surroundings shot).

The second shot I had a decent go at was one I snapped while out grabbing some beers with some of the Rackspace guys (Hi Tim and Eddie!) in Hong Kong. Darktable let me develop the RAW image from my D200 and get exactly the image I was looking for…. well, at least to my ability so far. Very, very impressed.

Being a photographer and using Ubuntu/GNOME has never been so exciting. Any inclination I had of setting up a different OS for that “real” photo stuff is completely gone.

(Incidentally, I will be talking about darktable at LUV in July)

More film developing

I’ve developed some more film! Here’s some shots from last time I was in Hobart. All shot on Ilford HP5+, which I quite like. I’m still getting used to this developing thing and next time should be much better!

The HP5+ was shot at the box speed of 400 with my Nikon F80 and the wonderful 50mm f1.8 lens. I developed in R09 OneShot (Rodinal) for the standard 6 minutes that the Ilford box tells me to. I used my Epson V350 Photo scanner to scan the negatives with iscan. I am wishing for better scanning software. *seriously* wishing.

These first four are probably going to be recognisable to anybody who knows Salamanca.

For those who love the Lark Distillery or English Bitter, I snapped a shot of (one of) the pints I had:

So I’d count this as fairly successful! Of course, need some animal shots:

… and there was a stop at a Sustainability Expo that had a surprising lack of bountiful vegan food when we got there…

I have to say, pulling that film out and seeing an image is incredibly rewarding.

If you want to know more about how I do it all on Linux, come to my talk at LUV this upcoming July 6th.

Drizzle @ Velocity (seemed to go well)

Monty’s talk at Velocity 2010 seemed to go down really well (at least from reading the agile admin entry on Drizzle). There are a few great bits from this article that just made me laugh:

“Oracle’s “run Java within the database” is an example of totally retarded functionality whose main job is to ruin your life”

Love it that we’re managing to get the message out.

ENUM now works properly (in Drizzle)

Over at the Drizzle blog, the recent 2010-06-07 tarball was announced. This tarball release has my fixes for the ENUM type, so that it now works as it should. I was quite amazed that such a small block of code could have so many bugs! One of the most interesting was the documented limit we inherited from MySQL (see the MySQL Docs on ENUM) of a maximum of 65,535 elements for an ENUM column.

This all started out from a quite innocent comment of Jay’s in a code review for adding support for the ENUM data type to the embedded_innodb engine. It was all pretty innocent… saying that I should use a constant instead of the magic 0x10000 number as a limit on an assert for sanity of values getting passed to the engine. Seeing as there wasn’t a constant already in the code for that (surprise number 1), I said I’d fix it properly in a separate patch (creating a bug for it so it wouldn’t get lost) and the code went in.

So, now, a few weeks after that, I got around to dealing with that bug (because hey, this was going to be an easy fix that’ll give me a nice sense of accomplishment). A quick look in the Field_enum code raised my suspicions of bugs… I initially wondered if we’d get any error message if a StorageEngine returned a table definition that had too many ENUM elements (for example, 70,000). So, I added a table to the tableprototester plugin (a simple dummy engine that is loaded for testing the parsing of specially constructed table messages) that had 70,000 elements for a single ENUM column. It didn’t throw an error. Darn. It did, however, have an incredibly large result for SHOW CREATE TABLE.

Often with bugs like this I may try to see if the problem is something inherited from MySQL. I’ll often file a bug with MySQL as well if that’s the case. If I can, I’ll sometimes attach the associated patch from Drizzle that fixes the bug, sometimes with a patch directly for and tested on MySQL (if it’s not going to take me too long). Whether these patches are ever applied is a whole other thing – and sometimes you get things like “each engine is meant to have auto_increment behave differently!” – which doesn’t inspire confidence.

But anyway, the MySQL limit is somewhere between 10850 and 10900. This is not at all what’s documented. I’ve filed the appropriate bug (Bug #54194) with reproducible test case and the bit of problematic code. It turns out that this is (yet another) limit of the FRM file. The limit is “about 64k FRM”. The bit of code in MySQL that was doing the checking for the ENUM limit was this:

    /* Hack to avoid bugs with small static rows in MySQL */
    reclength=max(file->min_record_length(table_options),reclength);
    if (info_length+(ulong) create_fields.elements*FCOMP+288+
        n_length+int_length+com_length > 65535L || int_count > 255)
    {
      my_message(ER_TOO_MANY_FIELDS, ER(ER_TOO_MANY_FIELDS), MYF(0));
      DBUG_RETURN(1);
    }

So it’s no surprise to anyone how this specific limit (the number of elements in an ENUM) got missed when I converted Drizzle from using an FRM over to a protobuf based structure.

So a bunch of other cleanup later, a whole lot of extra testing and I can pretty confidently state that the ENUM type in Drizzle does work exactly how you think it would.

Either way, if you’re getting anywhere near 10,000 choices for an ENUM column you have no doubt already lost.

New CREATE TABLE performance record!

4 min 20 sec

So next time somebody complains about NDB taking a long time in CREATE TABLE, you’re welcome to point them to this :)

- A single CREATE TABLE statement

- It had ONE column

- It was an ENUM column.

- With 70,000 possible values.

- It was 605kb of SQL.

- It ran on Drizzle

This was to test if you could create an ENUM column with greater than 2^16 possible values (you’re not supposed to be able to) – bug 589031 has been filed.

How does it compare to MySQL? Well… there are other problems (Bug 54194 – ENUM limit of 65535 elements isn’t true filed). Since we don’t have any limitations in Drizzle due to the FRM file format, we actually get to execute the CREATE TABLE statement.

Still, why did this take more than four minutes? I luckily managed to run poor man’s profiler during query execution. I very easily found out that I had this thread constantly running check_duplicates_in_interval(), which does a stupid linear search for duplicates. It turns out, that for 70,000 items, this takes approximately four minutes and 19.5 seconds. Bug 589055 CREATE TABLE with ENUM fields with large elements takes forever (where forever is defined as a bit over four minutes) filed.

So I replaced check_duplicates_in_interval() with an implementation using a hash table (boost::unordered_set actually) as I wasn’t quite immediately in the mood for ripping out all of TYPELIB from the server. I can now run the CREATE TABLE statement in less than half a second.
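To make the difference concrete, here is a minimal sketch of the two approaches side by side. It is not the server code: the function names are made up for illustration, it uses std::unordered_set rather than boost::unordered_set, and it compares strings byte-for-byte, whereas a real server check would likely need to respect the column’s collation.

```cpp
#include <cassert>        // assert, for quick sanity checks by the caller
#include <cstddef>
#include <string>
#include <unordered_set>
#include <vector>

// Quadratic check: compare every element against every later one.
// This is the general shape of a linear-search-per-element approach
// (illustrative only, not the actual check_duplicates_in_interval()).
bool has_duplicates_linear(const std::vector<std::string>& names) {
    for (std::size_t i = 0; i < names.size(); ++i) {
        for (std::size_t j = i + 1; j < names.size(); ++j) {
            if (names[i] == names[j]) return true;
        }
    }
    return false;
}

// Hash-based check: a single pass, expected constant time per insert.
bool has_duplicates_hashed(const std::vector<std::string>& names) {
    std::unordered_set<std::string> seen;
    seen.reserve(names.size());
    for (const std::string& name : names) {
        // insert() returns {iterator, bool}; the bool is false when the
        // value was already in the set, i.e. we found a duplicate.
        if (!seen.insert(name).second) return true;
    }
    return false;
}
```

With 70,000 elements the first version does on the order of 70,000 × 70,000 / 2 comparisons in the worst case, while the second does 70,000 hash inserts, which is where the gap between minutes and a fraction of a second comes from.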

So now, I can run my test case in much less time and indeed check for correct behaviour rather quickly.

I do have an urge to find out how big I can get a valid table definition file to though…. should be over 32MB…

A warning to Solaris users…. (fsync possibly doesn’t)

Read the following:

- Oracle/Sun ZFS Data Loss – Still Vulnerable

- OpenSolaris Bug 6880764

- Data loss running Oracle on ZFS on Solaris 10, pre 142900-09

Linux has its fair share of dumb things with data too (ext3 not defaulting to using write barriers is a good one). This is however particularly nasty… I’d have really hoped there were some good tests in place for this.

This should also be a good warning to anybody implementing advanced storage systems: we database guys really do want to be able to write things reliably and you really need to make sure this works.

So, Stewart’s current list of stupid shit you have to do to ensure a 1MB disk write goes to disk in a portable way:

- You’re a database, so you’re using O_DIRECT

- Use < 32k disk writes

- fsync()

- write 32-64mb of sequential data to hopefully force everything out of the drive write cache and onto the platter to survive power failure (because barriers may not be on). Increase this based on whatever caching system happens to be in place. If you think there may be battery backed RAID… maybe 1GB or 2GB of data writes

- If you’re extending the file, don’t bother… that especially seems to be buggy. Create a new file instead.
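A rough sketch of part of that list, assuming a POSIX system. It only covers the small writes, the fsync() and the “create a new file” items; it deliberately leaves out O_DIRECT (which needs aligned buffers and platform-specific flags) and the extra sequential writes to push data out of the drive cache. The function name and the 16 KiB chunk size are choices made for this example.

```cpp
#include <cassert>
#include <cerrno>
#include <cstddef>
#include <fcntl.h>
#include <unistd.h>

// Largest single write() this sketch will issue: 16 KiB, safely under 32k.
constexpr std::size_t kMaxChunk = 16 * 1024;

// Write `len` bytes from `data` to a NEW file at `path` in small chunks,
// then fsync() it. Returns true only if every step reported success.
// O_EXCL makes this fail rather than extend or overwrite an existing file.
bool write_small_chunks_and_sync(const char* path, const char* data, std::size_t len) {
    assert(path != nullptr && (data != nullptr || len == 0));
    int fd = open(path, O_WRONLY | O_CREAT | O_EXCL, 0644);
    if (fd < 0) return false;

    std::size_t done = 0;
    bool ok = true;
    while (ok && done < len) {
        std::size_t want = len - done;
        if (want > kMaxChunk) want = kMaxChunk;
        ssize_t n = write(fd, data + done, want);
        if (n < 0) {
            if (errno == EINTR) continue;  // interrupted before writing: retry
            ok = false;
        } else {
            done += static_cast<std::size_t>(n);  // short writes just loop again
        }
    }
    if (ok && fsync(fd) != 0) ok = false;  // a failed fsync() means "not durable"
    if (close(fd) != 0) ok = false;
    return ok;
}
```

Even when this returns true, it only tells you what the OS reported, which is rather the point of the list above.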

Of course you could just assume that the OS kind of gets it right…. *laugh*

BLOBS in the Drizzle/MySQL Storage Engine API

Another (AFAIK) undocumented part of the Storage Engine API:

We all know what a normal row looks like in Drizzle/MySQL row format (a NULL bitmap and then column data):

Nothing that special. It’s a fixed sized buffer, Field objects reference into it, you read out of it and write the values into your engine. However, when you get to BLOBs, we can’t use a fixed sized buffer as BLOBs may be quite large. So, with BLOBs, the in-row part has two pieces. The first is the length of the BLOB (1, 2, 3 or 4 bytes – in Drizzle it’s only 3 or 4 bytes now and soon only 4 bytes once we fix a bug that isn’t interesting to discuss here). The second is a pointer to a location in memory where the BLOB is stored. So a row that has a BLOB in it looks something like this:

The size of the pointer is (of course) platform dependent. On 32bit machines it’s 4 bytes and on 64bit machines it’s 8 bytes.
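As an illustration of that in-row layout, here is a small sketch that packs and unpacks a length plus a pointer. It is not the server’s Field code: the helper names are invented, the length is fixed at 4 bytes, and storing it little-endian is an assumption of this example.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

// Hypothetical layout for one BLOB column inside a row buffer:
//   [4-byte length, little-endian (assumed)][pointer to the BLOB bytes]
// The pointer takes sizeof(void*) bytes: 4 on 32-bit, 8 on 64-bit platforms.
constexpr std::size_t kBlobLengthBytes = 4;
constexpr std::size_t kBlobSlotBytes = kBlobLengthBytes + sizeof(const unsigned char*);

// Store length + pointer into the row at `slot` (needs kBlobSlotBytes bytes).
void blob_slot_store(unsigned char* slot, const unsigned char* blob, std::uint32_t length) {
    for (std::size_t i = 0; i < kBlobLengthBytes; ++i) {
        slot[i] = static_cast<unsigned char>(length >> (8 * i));
    }
    // memcpy avoids assuming the pointer is aligned within the row buffer.
    std::memcpy(slot + kBlobLengthBytes, &blob, sizeof(blob));
}

// Read the length and pointer back out of the row.
const unsigned char* blob_slot_load(const unsigned char* slot, std::uint32_t* length) {
    assert(length != nullptr);
    std::uint32_t len = 0;
    for (std::size_t i = 0; i < kBlobLengthBytes; ++i) {
        len |= static_cast<std::uint32_t>(slot[i]) << (8 * i);
    }
    *length = len;
    const unsigned char* blob = nullptr;
    std::memcpy(&blob, slot + kBlobLengthBytes, sizeof(blob));
    return blob;
}
```

Note that the row only ever holds the pointer, never the BLOB bytes themselves, which is why the question of who owns that memory matters so much.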

Now, if I were any other source of documentation, I’d stop right here.

But I’m not. I’m a programmer writing a Storage Engine who now has the crucial question of memory management.

When your engine is given the row from the upper layer (such as doInsertRecord()/write_row()) you don’t have to worry, for the duration of the call, the memory will be there (don’t count on it being there after though, so if you’re not going to immediately splat it somewhere, make your own copy).

For reading, you are expected to provide a pointer to a location in memory that is valid until the next call to your Cursor. For example, rnd_next() call reads a BLOB field and your engine provides a pointer. At the subsequent rnd_next() call, it can free that pointer (or at doStopTableScan()/rnd_end()).

HOWEVER, this is true except for index_read_idx_map(), which in the default implementation in the Cursor (handler) base class ends up doing a doStartIndexScan(), index_read(), doEndIndexScan(). This means that if a BLOB was read, the engine could have (quite rightly) freed that memory already. In this case, you must keep the memory around until either a reset() or extra(HA_EXTRA_FLUSH) call.
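One conservative way to satisfy the index_read_idx_map() case is to have the engine own every BLOB buffer it hands out and only free them at reset() or extra(HA_EXTRA_FLUSH). The sketch below shows that idea with a made-up helper class; BlobKeeper and its methods are hypothetical and not part of the Drizzle or MySQL API.

```cpp
#include <cassert>   // assert, for quick sanity checks by the caller
#include <cstddef>
#include <memory>
#include <string>
#include <vector>

// Hypothetical engine-side helper. It owns every BLOB buffer handed to the
// upper layer and only frees them when the engine says it is safe to.
class BlobKeeper {
public:
    // Copy `bytes` into engine-owned memory and return a pointer that stays
    // valid until release_all(), suitable for storing in the row buffer.
    const char* keep(const std::string& bytes) {
        held_.push_back(std::unique_ptr<std::string>(new std::string(bytes)));
        return held_.back()->data();
    }

    // Intended to be called from the engine's reset() or
    // extra(HA_EXTRA_FLUSH) handling, per the rule described above.
    void release_all() { held_.clear(); }

    std::size_t held_count() const { return held_.size(); }

private:
    // unique_ptr keeps each string object at a fixed address, so pointers
    // returned by keep() survive the vector reallocating as it grows.
    std::vector<std::unique_ptr<std::string>> held_;
};
```

The trade-off is memory: buffers pile up between resets. An engine that frees eagerly on the next rnd_next() would use less, but then has to know it is not being driven through index_read_idx_map().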

This exception is tested (by accident) by a whole single query in type_blob.test – a monster of a query that’s about a seven way join with a group by and an order by. It would be quite possible to write a fairly functional engine and completely miss this.

Good luck.

This blog post (but not the whole blog) is published under the Creative Commons Attribution-Share Alike License. Attribution is by linking back to this post and mentioning my name (Stewart Smith).

nocache LD_PRELOAD

Want to do something like “cp big_file copy_of_big_file” or “tar xfz big_tarball.tar.gz” but without thrashing your cache?

Enrico Zini has a nice little LD_PRELOAD called nocache.

$ nocache tar xfz foo.tar.gz

Goes well with libeatmydata. A pair of tools for compensating for your Operating System casually hating you.

I imagine people will love this when taking database backups.

Using the row buffer in Drizzle (and MySQL)

Here’s another bit of the API you may need to use in your storage engine (it also seems to be rather unknown). I believe the only place where this has really been documented is ha_ndbcluster.cc, so here goes….

Drizzle (through inheritance from MySQL) has its own (in memory) row format (it could be said that it has several, but we’ll ignore that for the moment for sanity). This is used inside the server for a number of things. When writing a Storage Engine all you really need to know is that you’re expected to write these into your engine and return them from your engine.

The row buffer format itself is kind-of documented (in that it’s mentioned in the MySQL Internals documentation) but everywhere that’s ever pointed to makes the (big) assumption that you’re going to be implementing an engine that just uses a more compact variant of the in-memory row format. The notable exception is the CSV engine, which only ever cares about textual representations of data (calling val_str() on a Field is pretty simple).

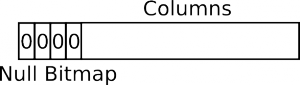

The basic layout is a NULL bitmap plus the data for each non-null column:

Except that the NULL bitmap is byte aligned. So in the above diagram, with four nullable columns, it would actually be padded out to 1 byte:

Each column is stored in a type-specific way.
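The byte alignment of the NULL bitmap is just a round-up to a whole number of bytes. A minimal sketch, with invented helper names; the bit order (least significant bit first) is an assumption of this example, and it ignores any reserved bits a real server may keep in the bitmap.

```cpp
#include <cassert>   // assert, for quick sanity checks by the caller
#include <cstddef>

// Bytes needed for a NULL bitmap with one bit per nullable column,
// rounded up to a whole byte.
constexpr std::size_t null_bitmap_bytes(std::size_t nullable_columns) {
    return (nullable_columns + 7) / 8;
}

// The case from the text: four nullable columns are padded out to 1 byte.
static_assert(null_bitmap_bytes(4) == 1, "four nullable columns fit in one byte");

// True if the bit for `column` is set in the bitmap at the start of `row`.
inline bool is_null(const unsigned char* row, std::size_t column) {
    return ((row[column / 8] >> (column % 8)) & 1u) != 0;
}

// Mark `column` as NULL in the bitmap at the start of `row`.
inline void set_null(unsigned char* row, std::size_t column) {
    row[column / 8] |= static_cast<unsigned char>(1u << (column % 8));
}
```

The column data then starts null_bitmap_bytes(n) bytes into the row.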

Each Table (an instance of an open table which a Cursor is used to iterate over parts of) has two row buffers in it: record[0] and record[1]. For the most part, the Cursor implementation for your Storage Engine only ever has to deal with record[0]. However, sometimes you may be asked to read a row into record[1], so your engine must deal with that too.

A Row (no, there’s no object for that… you just get a pointer to somewhere in memory) is made up of Fields (as in Field objects). It’s really made up of lots of things, but if you’re dealing with the row format, a row is made up of fields. The Field objects let you get the value out of a row in a number of ways. For an integer column, you can call Field::val_int() to get the value as an integer, or you can call val_str() to get it as a string (this is what the CSV engine does, just calls val_str() on each Field).

The Field objects are not part of a row in any way. They instead have a pointer to record[0] stored in them. This doesn’t help you if you need to access record[1] (because that can be passed into your Cursor methods). Although the buffer passed into various Cursor methods is usually record[0] it is not always record[0]. How do you use the Field objects to access fields in the row buffer then? The answer is the Field::move_field_offset(ptrdiff_t) method. Here is how you can use it in your code:

    ptrdiff_t row_offset= buf - table->record[0];
    (**field).move_field_offset(row_offset);
    /* do things with field */
    (**field).move_field_offset(-row_offset);

Yes, this API completely sucks and is very easy to misuse and abuse – especially in error handling cases. We’re currently discussing some alternatives for Drizzle.
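One way to make the move/undo pair harder to get wrong in error paths is a small scope guard that undoes the offset in its destructor. This is only a sketch of the idea, not one of the alternatives being discussed for Drizzle: FieldOffsetGuard is a made-up name, and the Field struct here is a stand-in stub with just the one method mentioned above.

```cpp
#include <cassert>   // assert, for quick sanity checks by the caller
#include <cstddef>

// Stand-in for the server's Field class, reduced to the one method the
// text mentions. The real class has far more state; this stub exists only
// so the sketch compiles on its own.
struct Field {
    unsigned char* ptr;
    void move_field_offset(std::ptrdiff_t delta) { ptr += delta; }
};

// Hypothetical scope guard: shifts a Field to point into `buf` on
// construction and shifts it back on destruction, including on early
// return or when an exception unwinds the scope.
class FieldOffsetGuard {
public:
    FieldOffsetGuard(Field& field, const unsigned char* buf, const unsigned char* record0)
        : field_(field), offset_(buf - record0) {
        field_.move_field_offset(offset_);
    }
    ~FieldOffsetGuard() { field_.move_field_offset(-offset_); }

    // Copying would undo the offset twice, so forbid it.
    FieldOffsetGuard(const FieldOffsetGuard&) = delete;
    FieldOffsetGuard& operator=(const FieldOffsetGuard&) = delete;

private:
    Field& field_;
    std::ptrdiff_t offset_;
};
```

Usage would be something like `FieldOffsetGuard guard(**field, buf, table->record[0]);` at the top of a block, with the field restored automatically when the block exits.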

This blog post (but not the whole blog) is published under the Creative Commons Attribution-Share Alike License. Attribution is by linking back to this post and mentioning my name (Stewart Smith).